Chapter 6

8 essential usability testing methods for actionable UX insights

There are many usability testing methods available, from lab-based usability testing to in-house heuristic evaluation. In this chapter, we look at the top techniques you need to know when running a usability test. We explore the difference between quantitative and qualitative data, explain how to choose between moderated and unmoderated usability testing, and help you pick the right UX research method.

What to do before your usability test

Before we get into the different usability methods available, let’s look at how to prepare for your usability test. Here are the key questions to consider before you start testing:

- What’s your goal?

- What results do you expect?

- Who will conduct the test?

- Where are you finding participants?

- What tool are you using (if any)?

- How will you analyze the results?

Once you’ve thought about the above questions, it’s time to move on to the final decision.

- What method are you using?

Selecting your usability testing method is a challenging, but critical, decision. Work with your team and research experts to determine the best method to achieve the insights you’re looking to gather.

To help make your choice, here’s our breakdown of usability testing methods.

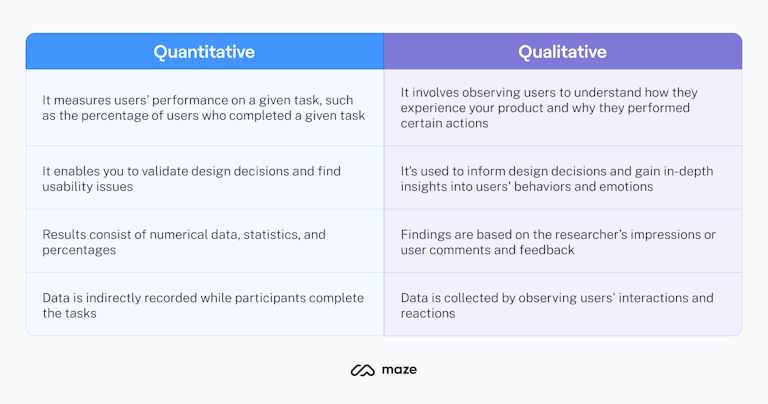

Quantitative vs. qualitative usability testing

Whenever you collect data during usability testing, it will be one of two types: qualitative or quantitative. Neither is inherently better, though in specific use cases one may be more beneficial than the other. Most research benefits from having both types of data, so it's important to understand the differences between the two and how best to employ each. Think of them as different players on the same team: their goal is the same (gaining valuable insights), but their approach varies.

We do both types of usability testing: qualitative and quantitative. I think it's really important to have a mix of both. Quantitative testing gives us hard numbers, and those metrics are key in making data-driven design decisions. On the other hand, qualitative testing is incredibly useful because you get the voice of the user, and they're not just a "metric".

Matt Elbert, Senior Design Manager at Movista

In the end, qualitative and quantitative usability testing are both valuable tools in the user research toolkit, but which one works for you will depend on your research goal.

The difference between qualitative and quantitative data

All usability testing involves participants attempting to complete assigned tasks with a product. Though the format of qualitative and quantitative tests doesn't change that much for the participant, how and what kind of data you collect will differ significantly.

Qualitative data

Qualitative data consists of observational findings. That means there isn't a hard number or statistic assigned to the data. This type of data may come in the form of notes from observation or comments from participants. Qualitative data requires interpretation, and different observers could come to different conclusions during a test.

The main distinction between quantitative and qualitative testing is in the way the data are collected. With qualitative testing, data about behaviors and attitudes are directly collected by observing what users do and how they react to your product.

In contrast, quantitative testing gathers data about users' behaviors and attitudes indirectly. With usability testing tools, quantitative data is usually recorded automatically while participants complete the tasks.

Examples of qualitative usability data: product reviews, user comments during usability testing, descriptions of the issues encountered, facial expressions, preferences, etc.

Quantitative data

Quantitative data consists of measurements that can be expressed in numerical terms. This data comes in the form of metrics such as how long it took someone to complete a task or what percentage of a group clicked a section of a design.

With quantitative data, you need the context of the test for it to make sense. For example, if I simply told you that 50% of participants failed to complete the task, that alone would give you little insight into why they had trouble.

Examples of quantitative usability data: completion rates, mis-click rates, time spent, etc.
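To make these metrics concrete, here is a minimal Python sketch that computes them from raw session records. The `Session` record format, the sample data, and the definition of mis-click rate as mis-clicks divided by total clicks are all assumptions for illustration; usability testing tools may define and report these metrics differently.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Session:
    """One participant's attempt at one task (hypothetical record format)."""
    completed: bool  # did the participant finish the task?
    seconds: float   # time spent on the task
    clicks: int      # total clicks during the task
    misclicks: int   # clicks that landed outside any expected target


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Compute completion rate, mis-click rate, and median time for one task."""
    if not sessions:
        raise ValueError("need at least one session")
    completion_rate = sum(s.completed for s in sessions) / len(sessions)
    total_clicks = sum(s.clicks for s in sessions)
    misclicks = sum(s.misclicks for s in sessions)
    # Assumed definition: share of all clicks that were mis-clicks.
    misclick_rate = misclicks / total_clicks if total_clicks else 0.0
    # Time is taken over successful attempts only (one common convention);
    # the median resists distortion by a single very slow participant.
    success_times = [s.seconds for s in sessions if s.completed]
    median_time = median(success_times) if success_times else float("nan")
    return {
        "completion_rate": completion_rate,
        "misclick_rate": misclick_rate,
        "median_time_s": median_time,
    }


# Hypothetical results from four participants.
sessions = [
    Session(completed=True, seconds=42.0, clicks=5, misclicks=1),
    Session(completed=True, seconds=65.5, clicks=8, misclicks=2),
    Session(completed=False, seconds=120.0, clicks=14, misclicks=6),
    Session(completed=True, seconds=38.2, clicks=4, misclicks=0),
]
print(summarize(sessions))
```

Reporting the median rather than the mean time is a deliberate choice in this sketch: task times are often skewed by a few slow participants, and the median is less affected by them.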

When to do qualitative or quantitative testing

You can cut your grass with a pair of scissors, but a lawnmower is far more efficient. The same is true with qualitative and quantitative usability testing. In most scenarios, you could use either to collect data, but one will be better depending on the task at hand.

The type of data you collect will depend a lot on the goal and hypothesis of your test. When you know what you want to test and you identify the goals of your test, you will know what type of data you need to collect.

Vaida Pakulyte, UX Researcher and Designer at Electrolux

Below we provide some example scenarios for both. They're not meant to be exhaustive, but representative of when you might choose one type of testing over the other.

Quantitative usability testing: Measuring user experience with data

With quantitative testing, the goal is to uncover what is happening in a product. Quantitative testing works well when you want to find out how your design performs and whether users encounter major usability problems while using your product.

For example, let's say you just released a reminder function in your app. You can run a test where you ask participants to set a reminder for a day in the week. You want to know if participants are able to complete the task within two minutes. Quantitative usability testing is great for this scenario because you can measure the time it takes for a participant to complete the task.

Let's say you find only 30% of participants are able to complete the task within two minutes. With that data in hand, you can study heatmaps of participants' journeys to understand which usability issues they encountered while trying to complete the task. Or, you can follow up the quantitative study with a few user interviews to dive deeper into the experience of the users who struggled to complete the task.
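Here's a minimal Python sketch of the calculation behind a figure like that 30%, assuming you have each participant's completion time. The participant data and the `share_within_limit` helper are hypothetical. Participants who never finished count as failures rather than being dropped, so the rate isn't inflated by leaving them out.

```python
from typing import Optional

TIME_LIMIT_S = 120.0  # the two-minute target from the reminder example


def share_within_limit(times: list[Optional[float]], limit: float = TIME_LIMIT_S) -> float:
    """Fraction of ALL participants who finished within `limit` seconds.

    None marks a participant who never completed the task; they count
    against the rate instead of being dropped from the denominator.
    """
    if not times:
        raise ValueError("need at least one participant")
    on_time = sum(1 for t in times if t is not None and t <= limit)
    return on_time / len(times)


# Hypothetical completion times (seconds) for 10 participants; None = gave up.
times = [95.0, 140.2, None, 180.0, 110.5, None, 201.3, 150.0, 118.0, None]

rate = share_within_limit(times)
# Participants who missed the target are candidates for follow-up interviews.
struggled = [i for i, t in enumerate(times) if t is None or t > TIME_LIMIT_S]

print(f"{rate:.0%} completed within two minutes")
print("Follow up with participants:", struggled)
```

The `struggled` list maps directly onto the follow-up step described above: it tells you which participants to invite for an interview.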

Product tip ✨

Maze automatically collects quantitative data such as time spent, success rates, and mis-click rates, and gives you heatmaps for each session so you can dive deeper into the test results and improve the UX of your product. Try it out for free.

Qualitative usability testing: Understanding the why behind actions

Qualitative user testing enables you to understand why someone does something in a product and to research your target audience's pain points, opinions, and mental models. Qualitative usability testing usually employs the think-aloud method during testing sessions. This research technique asks participants to say whatever they're thinking as they complete the tasks.

This way, you get access to users' opinions and comments, which can be very useful in trying to understand why an experience or design doesn't work for them, or what needs to be changed. As you collect more qualitative data, you may start to discover trends among users, which you can use to make changes in the next design iteration.

Qualitative and quantitative user testing perform best when used in conjunction with one another. So, while they are separate methods, it may be best to think of them as two pieces of a whole.

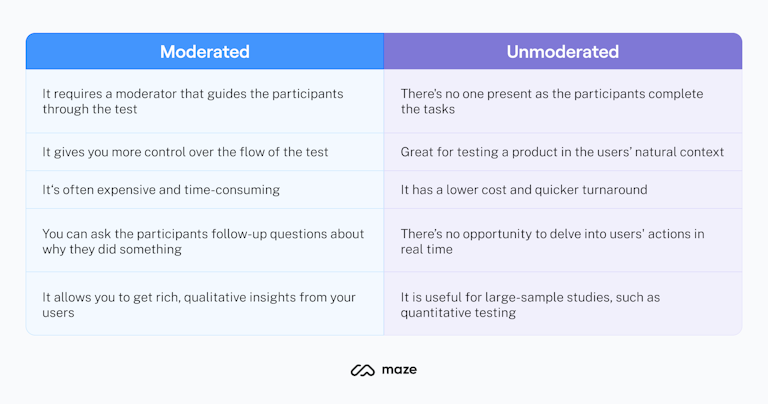

Moderated vs. unmoderated usability testing

The mantra of usability testing is, "We are testing the product, not you."

Carol Barnum, Usability Testing Essentials: Ready, Set...Test!

When running a usability study, you have to decide on one of two approaches: moderated or unmoderated. Both are viable options, and each has advantages and disadvantages depending on your research goals. In this section, we explain what moderated and unmoderated usability testing are, the pros and cons of each, and when to use each type.

Moderated usability testing

As the name suggests, in a moderated usability test, a moderator is present with the participant to guide them through the test. The role of the moderator is to facilitate the session, instruct the user on the tasks to complete, ask follow-up questions, and provide relevant guidance.

Moderated usability tests can happen either in-person or remotely. When it's done remotely, the moderator usually joins through a video call, and the participant uses screen sharing to display their screen, so the moderator can see and hear exactly what the participant is doing during the test.

You should be careful not to implement your bias into the user test. Phrasing your questions and your tasks to allow for clear, open-ended, and non-biased responses is very important.

Matt Elbert, Senior Design Manager at Movista

When running a moderated usability test, it's important to be aware of a few best practices. The first is not to lead the participant towards an answer or action. That means you have to carefully phrase questions in a way that prompts users to complete the tasks but leaves enough room for them to find out how to do it. Make sure you're not asking them things like "Click here" or "Go to that page." Even if the intention is good, these types of instructions bias the results.

Another best practice to keep in mind is to encourage participants to explore the product or prototype as it naturally comes to their mind. There is no wrong or right answer—the goal of usability testing is to understand how they experience your product and improve accordingly. As a moderator, you should clearly explain that the thing being tested is the product, not the user.

I know the strong desire to leap to a user’s aid, defend your design, and convince the world you’re right. It’s okay to let users struggle, to accept moments of silence or thought, and let a design fail a test. Interrupting that process might be one of the worst things you can do for your design.

Taylor Palmer, Product Design Lead at Range

Examples of moderated usability tests are lab tests, guerrilla testing, card sorting, user interviews, screen sharing, etc.

The benefits of moderated usability testing

One of the biggest advantages of moderated usability testing is control. Since these tests are guided, you're able to keep participants focused on completing the tasks and answering your questions. If you're conducting the experiment in a lab, you're able to control for environmental factors and make sure those don't skew your results.

Most importantly, moderated tests let you ask participants follow-up questions about why they did something. In an unmoderated test you're sometimes left guessing, so having the chance to dive deeper into an issue or question with participants helps you uncover learnings about user behavior and pain points.

By moderating the usability tests, I can observe body language and understand the pain points better. I also get to know the user, build trust, and show that their insights are truly important to the team and me.

Vaida Pakulyte, UX Researcher and Designer at Electrolux

For example, if you're running a moderated session to test a new user interface for a check-out process—and notice a participant struggling with a part of the process—you can ask them what they thought about the process, how they would improve it, and why they struggled to use the product. Such opportunities rarely arise in an unmoderated session, making moderated usability testing essential if you're looking to get rich, qualitative insights from your users.

The disadvantages of moderated usability testing

Moderated tests require investment, both in resources, such as a tool or a lab to run the tests, and in time. Moderated usability testing sessions take time to plan, organize, and run, as each individual session needs to be facilitated by a researcher or someone with experience in the field.

With these constraints, your pool of possible participants may also shrink. Finding participants to come to your lab or join a user interview call can be a hassle, so you'll usually end up with a sample too small for anything but qualitative feedback. For those reasons, moderated user tests are best suited to the early stages of the UX design process, usually during formative research.

When to run moderated usability testing

Moderated tests work best at the initial stages of the design process, as they allow you to dig deeper into the experience of the participants, and get early feedback to inform the overall direction of the design.

You can run moderated usability tests with low- to mid-fidelity prototypes or wireframes to collect users' opinions, comments, and reactions to a first iteration of the design. At this stage of the process, you'll usually be testing the information architecture or the layout of the webpage, or simply running focus groups to find out whether your solution works for real users.

By asking usability testing questions before, during, and after the test, you and your team can uncover insights that help you make better design decisions.

As you move through the design process, you can continue doing moderated usability tests with users after each iteration, and based on the results, design the final iteration.

Unmoderated usability testing

An unmoderated usability test happens without the presence of a moderator. The participant is given instructions and tasks to complete beforehand, but there's no one present as they're completing the assigned tasks.

Unmoderated user tests happen mostly at the place and time of the participant's choosing. Similar to moderated testing, you can run an unmoderated test either in-person or remotely. Depending on your resources, one might be better than the other. We look at remote vs. in-person usability testing in more detail in the next chapter.

Examples of unmoderated usability tests are first-click tests, session recordings, eye-tracking, 5-second tests, etc.

Product tip ✨

Maze allows you to run unmoderated tests with unlimited users. Get started by importing your prototype into Maze.

The benefits of unmoderated usability testing

One of the advantages of unmoderated usability testing is that it has a lower cost and quicker turnaround. The obvious reason for this is that you don't have to hire a moderator, find a dedicated lab space, and look for test participants who are willing to come to your lab.

Along those same lines, unmoderated remote tests have an extra advantage: the participant can complete the assigned tasks at the time and place of their choosing. Because this uncontrolled environment more closely resembles how someone would use your product in real life, it can yield more realistic results.

Last but not least, one of the biggest benefits of unmoderated usability testing is the ability to collect results from a larger sample of test participants. Because you don't have to moderate each session, measuring usability metrics or running A/B tests is much easier in an unmoderated environment.
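As an illustration of the kind of analysis a larger unmoderated sample makes possible, here is a minimal Python sketch of a two-proportion z-test comparing task completion rates between two design variants in an A/B test. The variant counts are hypothetical, and this is a textbook normal approximation, not how any particular testing tool computes significance; with small samples, an exact test is a safer choice.

```python
from math import erf, sqrt


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))


def two_proportion_z_test(
    successes_a: int, n_a: int, successes_b: int, n_b: int
) -> tuple[float, float]:
    """Two-sided z-test for a difference between two completion rates.

    Returns (z, p_value). Uses the pooled proportion for the standard error,
    which is a reasonable approximation only for fairly large samples.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation: every participant succeeded, or every one failed")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - normal_cdf(abs(z)))
    return z, p_value


# Hypothetical unmoderated A/B test: 100 participants per design variant.
z, p = two_proportion_z_test(successes_a=60, n_a=100, successes_b=75, n_b=100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Running the same comparison with 10 participants per variant would rarely reach significance, which is why this kind of analysis suits unmoderated tests with large samples.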

Unmoderated tests are useful for large-sample studies, such as quantitative testing, or when I need the results quicker.

Vaida Pakulyte, UX Researcher and Designer at Electrolux

With unmoderated remote usability testing, you can collect results in hours or even minutes. Plus, unmoderated testing makes it possible to test with a global user base across different time zones.

The disadvantages of unmoderated usability testing

On the other hand, unmoderated usability testing has drawbacks you'll need to keep in mind when choosing this method. Because no one is present during the session, you can't ask follow-up questions or delve into users' actions in real time, which limits the types of insights and data you can gather.

When to run unmoderated usability testing

When you need to collect quantitative data, consider running an unmoderated usability test. In those scenarios, you're looking for statistically reliable data, which requires a large sample size, and testing many participants is faster and easier when sessions don't need a moderator.
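To see why sample size matters, here is a minimal Python sketch of a 95% confidence interval around a completion rate, using the adjusted Wald (Agresti-Coull) method. The choice of method and the sample counts are assumptions for illustration. With the same 60% observed completion rate, the interval from 5 participants comes out far wider than the interval from 100.

```python
from math import sqrt

Z_95 = 1.96  # z-value for a 95% confidence level


def adjusted_wald_interval(successes: int, n: int, z: float = Z_95) -> tuple[float, float]:
    """Adjusted Wald (Agresti-Coull) confidence interval for a completion rate."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    # Add z^2/2 successes and z^2/2 failures before computing the interval.
    n_adj = n + z**2
    p_adj = (successes + z**2 / 2) / n_adj
    margin = z * sqrt(p_adj * (1 - p_adj) / n_adj)
    # Clamp to the valid range for a proportion.
    return max(0.0, p_adj - margin), min(1.0, p_adj + margin)


# Same observed 60% completion rate, two hypothetical sample sizes.
for successes, n in [(3, 5), (60, 100)]:
    low, high = adjusted_wald_interval(successes, n)
    print(f"n={n:>3}: {low:.0%} to {high:.0%}")
```

The adjusted Wald method is used here because it behaves better than the plain normal approximation when samples are small or rates are near 0% or 100%.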

Additionally, unmoderated usability testing works best towards the end of the product development process. When you finish designing a final solution, you can run an unmoderated test with a high-fidelity prototype that closely resembles the final product. This helps confirm the solution works before you move on to development.

Another use case for unmoderated usability testing is measuring how well users perform specific tasks within the product, such as signing up, subscribing to the newsletter, or creating a new project. For each of these tasks, you can run a quick unmoderated usability test to make sure the user flow is intuitive and easy to use.

Unmoderated tests are usually done when remote testing using a combination of prototyping and testing tools. These allow you to create a test based on a prototype, share links to the test with participants, and even hire testers from a specialized testers' panel.

6 additional usability testing methods

Lab usability testing

In a lab usability test, participants attempt to complete a set of tasks on a computer or mobile device under the supervision of a trained moderator, who observes their interactions, asks questions, and responds to their feedback in real time.

As the name suggests, lab usability testing takes place in a purpose-built laboratory. Typically, this has two rooms divided by a one-way mirrored window to allow note-takers and observers to watch the test without being seen by the participants. Sessions can also be recorded for later review and in-depth analysis.

One main advantage of lab usability testing is that it provides extensive information on how users experience your product. Since there is a moderator, you can collect more qualitative data to get in-depth insights into users’ behaviors, motivations, and attitudes.

On the downside, this type of testing can be expensive and time-consuming because you need a dedicated environment, test participants, and a moderator. Also, it usually involves a small number of participants (5-10 participants per research round) in a controlled environment, with the risk of not being reflective of your user base.

When to use lab usability testing: If you want to gather in-depth, extensive feedback from participants

Contextual inquiry

The contextual inquiry method involves observing people in their natural contexts, such as their office or home, as they interact with the product the way they usually would. The researcher watches how users perform their activities and asks them questions to understand the reasons behind those actions.

As the research takes place in the users’ natural environment, this method is an excellent way to get rich, reliable information about users: their work practices, behaviors, and personal preferences. These insights are essential at the beginning of a project when evaluating requirements, personas, features, architecture, and content strategy. However, you can also use this method after a product release to test the success and efficiency of your solution.

There are four main principles of contextual inquiries:

- Context: Interviews are conducted in the user's natural environment

- Partnership: The researcher should collaborate with the user, observe their actions, and discuss what they did and why

- Interpretation: During the interview, the researcher should share interpretations and insights with the user, who may expand or correct the researcher's understanding

- Focus: It's important to plan for the inquiry and have a clear understanding of the research goal

When to use contextual inquiries: If it’s important that results reflect an organic scenario in a user’s real-world circumstance

Remote usability testing

Remote usability testing is a research method where the participant and the researcher are in different locations. In a remote test, the participants complete the tasks in their natural environment using their own devices. Sessions can be moderated or unmoderated and can be conducted over the phone or through a usability testing platform like Maze.

The main benefits of remote usability testing include the opportunity to recruit a large number of participants from different parts of the world. This method is also usually faster and cheaper than in-person testing. However, you'll have less control over the test environment and procedure overall, which is why it's crucial to choose the right tools and devices.

When to use remote usability testing: If you want to test a large group of users, or it’s important to test in multiple user locations

Phone interview

A phone interview is a remote usability test where a moderator instructs participants to complete specific tasks on their device and give feedback on the product. All feedback is collected automatically as the user’s interactions are recorded remotely.

Phone interviews work best to collect feedback from test participants scattered around different parts of the world. Another benefit is that they are less expensive and time-consuming than face-to-face interviews, allowing researchers to gather more information in a shorter amount of time.

When to use phone interviews: If you need to gather information from participants in different locations, in a short amount of time

Session recording

Session recordings are a fantastic way to see exactly how users interact with your site. They use software to record the actions that real, anonymous visitors take on a website, including mouse movement, clicks, and scrolling. Session recording data can help you understand which features interest your users most, discover interaction problems, and see where users stumble or leave.

This type of testing requires a software recording tool such as Maze. To get the most out of your results, you should also consider combining session recordings with other testing methods. In this way, you can gather more insights into why users performed certain actions.

When to use session recordings: If you want to see how users naturally interact with and navigate your product

Guerrilla usability testing

Guerrilla usability testing is a quick and low-cost way of testing a product with real users. Instead of recruiting a specific targeted audience to participate in the research, participants are approached in public places and asked to perform a quick usability test in exchange for a small gift such as a coffee. The sessions usually last between 10 and 15 minutes and cover fewer tasks than some other approaches.

This usability testing method is particularly useful to gather quick feedback without investing too much time and resources and works best at an early stage of the design process when you need to validate assumptions and identify core usability issues. However, if you need more finely-tuned feedback, it’s better to complement your research with other methods.

When to use guerrilla testing: If you’re looking for a low-cost testing method to gather results quickly

Moving forward

That’s it for our chapter on usability testing methods. Head on to our next chapters for in-depth breakdowns of remote usability testing and guerrilla testing, plus how to write your test questions.


Frequently asked questions about usability testing methods

What is usability testing?

Usability testing is the process of evaluating how easy to use and intuitive a product is by testing it with real users. Usability testing usually involves getting participants to complete a list of tasks while observing and noting their interactions to identify usability issues and areas of improvement.

What are the benefits of usability testing?

One of the benefits of usability testing is that you can fix usability issues before launching your product and make sure you create the best possible user experience. Ultimately, ensuring users can accomplish their goals smoothly will lead to long-term customer success.

In a nutshell, usability testing your early wireframes allows you to:

- Explore different early layout concepts

- Test your ideas rapidly and validate them with users

- Learn valuable information that you can use to guide the design of higher-fidelity prototypes

What are the different types of usability testing methods?

Quantitative or qualitative: quantitative testing measures users’ performance on a given task, such as the percentage of users who completed it. Qualitative testing involves observing users to understand how they experience your product and why they performed certain actions.

Moderated or unmoderated: in a moderated usability test, a moderator guides the participants through the test. In an unmoderated test, the participants receive instructions and tasks to complete beforehand, but there's no one present as they accomplish the tasks.

Remote or in-person: remote usability tests happen when the participant and the researcher are in separate locations. In-person tests take place in a testing lab or office under the guidance of a moderator.

Other types of usability testing methods include guerrilla testing, lab usability testing, contextual inquiry, phone interview, and session recordings.