“Show me where research has profited the bottom line”

“Why should we grow the team?”

“Prove your value”

If you’re a research leader, you’ve probably been on the receiving end of these phrases at some point over the last few years.

With budgets being tightened and layoffs on the rise, stakeholders aren't always sure of the true value of research. This leaves product teams and people who do research (PWDRs) on a hamster wheel, constantly having to prove their delivery, value, and worth.

Throughout my career, leading user research at edX and User Interviews, and now overseeing the research partner function at Maze, I’ve witnessed just how vital it is to measure the impact of research on business outcomes—to help evangelize research, showcase the value of user insights, and market yourself internally.

Research impact is when the knowledge generated by UX research influences a person, product, strategy, or organization.

Victoria Sosik, Director of UX Research at Verizon

While it’s easy to get lost in the craft of UX research, I always make sure to carve out time to shift my perspective and re-center on UX research impact.

In this article, I’ll explore why measuring research impact is critical to your team, and provide a framework for how you can get started measuring impact and proving the value of UXR internally.

Why is UX research important?

At its core, user research is about learning. We conduct research to learn about our users’ behaviors, needs, and pain points. We use those learnings to inform decisions and take action. Research for the sake of research just leads to insights collecting dust in a desk drawer. To conduct research with purpose, you need to start with a robust strategy and finish by reviewing the impact of your work.

As a researcher or UXR leader, a large part of your role is essentially running a marketing campaign on UXR.

Roy Opata Olende, Head of UX Research at Zapier

In Maze’s 2023 Research Maturity Report, we learned that organizations that leverage research at its highest potential gain 2.3x better business outcomes, including reduced time-to-market and increased revenue.

But that’s not all—research can be a powerful tool that brings a range of benefits to your team, like:

- Making data-informed decisions

- Reducing cognitive bias in the UX design process

- Testing and validating concepts

- Working on solutions that bring real value to customers

- Marketing your product internally and externally

Why should you measure UX research impact?

In my roles as Research Partner at Maze and Leadership Coach at Learn Mindfully, one of the most common things I hear from research leaders is the desire to make an impact. But what exactly does impact mean? Like many things, it depends on perspective.

I believe impact equates to value. As researchers, we want to be seen as valuable: to the business, our partners, and customers. So when we say we want to make an impact, we really mean we want to deliver value in all of these areas, and be seen as valuable in return.

Tracking UX research impact provides a mechanism to measure the value of our research practice. It can help to:

- Better identify research opportunities

- Know which projects had the most business impact

- Understand what worked, or didn’t, on previous projects

- Create a record of your wins for future team growth or career transitions

- Showcase your team’s work across the wider organization

Who does UX research impact help?

While its primary purpose is to prove the value of research (and thus your team), recording the impact of UX research actually helps multiple people across the business.

Yourself: Having an ongoing record of your impact not only builds self-confidence, but can serve as evidence of your professional development and growth. You could reference this during a performance review, interview for a new role, or your personal website. Consider it your own hype journal 🎉

Your team: Providing your research team with a catalog of their projects—and how they’ve impacted decisions around the organization—is a great way to improve morale, increase alignment, and motivate researchers by demonstrating the value of their work. I’ve seen first hand how it can help teams shift perspectives and celebrate all of the wins they've been driving.

The wider research function: Demonstrating the impact of UXR is a powerful way to evangelize research and showcase how much of the business research affects. Highlighting this on a wider level can encourage investment in UX research, help you advocate for budget or headcount, and improve stakeholder buy-in for user research.

Users: Understanding the impact of research, from the first user insight to the final product design, is key in developing a user-centered culture that drives product decision-making. Highlighting the value of user research internally is ultimately highlighting the value of listening to users.

How often should you measure UX research impact?

How often you choose to review your UX research impact depends on a multitude of factors:

- Your organization’s research maturity

- How often you conduct research

- The size of your team

- Your UX research strategy and goals

- Business cycles (e.g. budgets) and review periods

- The length of your research projects

For example, a more research-mature organization with a fully-developed UX research function may conduct regular research and UX audits to track their impact. If you’ve got a solid research operations team, this can fall within their wheelhouse and streamline adding impact insights to your UX research repository.

On the flip side, a smaller company with a single UX researcher running longitudinal studies may find ease and value in reviewing research impact less frequently.

I typically measure impact on a weekly, monthly, quarterly, and annual basis. I’ll reflect on what's working (or not working), and how our UX research has informed and influenced product decisions. This cadence and frequency works for my schedule and priorities, as tracking research impact is a key priority for my role.

Why is measuring impact hard?

In our Future of Research Report we found that 73% of product professionals currently track the impact of their research work, but connecting research to business outcomes remains one of the biggest challenges for PWDRs. In my experience, this is due to a few reasons:

Research takes time

Conducting research takes time, especially larger projects and generative or longitudinal studies like diary research. I know I’ve run multi-method studies that have taken a year before they’re fully ‘complete’. I often learned something from one phase of the study that informed the next, and the next. Once you have the insights, you still have to apply the research.

The time from insight to informed decision to measurable impact can be months or even years. It’s not uncommon for me to get messages from former teams telling me that the experiences I spent time researching are finally being implemented, two to three years later.

Over time, it’s also easy for documentation to be missed, roles to change, and new strategic priorities to take precedence over measuring impact.

There are intangibles in our work

While measuring usability testing or evaluative research has a clear-cut method, finding ways to assess strategic research presents a challenge. There are many intangibles when it comes to research—the excitement of an executive when they see a customer highlight reel, a product manager when they read your findings, the emotional impact on a user when they overcome a previous barrier. These more subjective and qualitative outcomes are far harder to measure.

Limited bandwidth

In-depth measurement of UX research impact takes time, and it becomes even more difficult if you're a UXR operating as a team of one or juggling multiple projects. In theory, this means tracking impact should be easier for larger teams with a ReOps partner, but in reality even fully-stacked research teams may be overwhelmed with requests and other priorities. Like the majority of UX research reporting, unfortunately impact simply gets forgotten, or shifted to be the lowest priority on the list.

No impact framework

Many research leaders and teams haven’t yet taken the initial steps of defining what impact actually means to them. Without a reliable UX research impact framework in place, it’s a lot harder to regularly track the impact of your work.

Put simply: you can’t measure what you don’t define.

A framework for measuring UX research impact

Now we’ve covered the roadblocks to tracking impact, let’s get into the weeds of how to measure impact.

To successfully track the impact of your UXR, you need a research impact framework. You can create your own or use a template (keep reading for some freebies). The majority of teams find it quicker to slot their research program into an existing framework, and it’ll save you a lot of time.

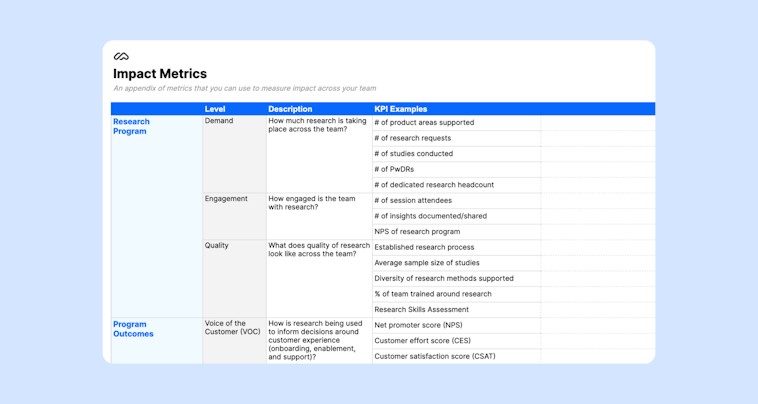

When I think about measuring research success, the research impact framework I use typically separates work into two key areas:

- Program design

- Outcomes

Let me explain what I mean by these.

Impact on program design

What I notice on many UX teams is that we often get caught up with what I call program design metrics. These are metrics related to your research program. We think about things like the number of studies we run, how engaged partners are with our work, or even the quality of the studies themselves. I typically divide these into three core buckets:

- Demand: How much research is taking place across the team?

- Engagement: How engaged is the wider team with research?

- Quality: What does quality of research look like across the team?

While these are all great signals to show visibility into how the work is getting done, they focus on the ‘what’ rather than the impact the team is having. It’s important to go one level deeper…

Impact on outcomes

Our work as researchers is bound to outcomes. These can range from customer to product or business outcomes. Compared to program design metrics, they’re often more difficult to tie back to our work directly, or just simply take longer to measure.

They include:

- Voice of the customer: How is research being used to inform decisions across customer experience (e.g. onboarding, enablement, support)? Think customer experience metrics such as NPS, CSAT, ticket resolution, and more!

- Product experience strategy: How is UX research informing product vision, user experience, or design directions? This can include usability metrics like SUS, time on task, error or success rates.

- Business: How is research informing business strategy decisions? Consider things like conversion rates, customer acquisition cost (CAC), churn rate, revenue, or bookings. This will more often apply to researchers who are working on market research or on growth teams.
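If you want to see how a couple of these metrics are actually calculated, here’s a minimal Python sketch. It uses the standard published scoring rules for NPS (percentage of promoters scoring 9 or 10, minus percentage of detractors scoring 0 to 6) and SUS (ten items on a 1 to 5 scale, rescaled to 0 to 100). The function names and sample scores are illustrative assumptions, not part of any framework mentioned in this article.

```python
def nps(ratings):
    """Net Promoter Score from 0-10 'likelihood to recommend' ratings."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 to 6
    return 100 * (promoters - detractors) / len(ratings)


def sus(responses):
    """System Usability Scale score (0-100) for a single respondent.

    `responses` holds the ten answers, each 1-5, in questionnaire order.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten responses")
    total = 0
    for index, answer in enumerate(responses):
        if index % 2 == 0:
            # Items 1, 3, 5, 7, 9 are positively worded: contribute answer - 1
            total += answer - 1
        else:
            # Items 2, 4, 6, 8, 10 are negatively worded: contribute 5 - answer
            total += 5 - answer
    return total * 2.5


# Made-up sample data, for illustration only
print(nps([10, 9, 9, 8, 7, 6, 3, 10]))        # 25.0
print(sus([4, 2, 5, 1, 4, 2, 4, 1, 5, 2]))    # 85.0
```

Comparing a score like this before and after a research-informed change is one way to tie a study to an outcome, though other things may have shifted the number too.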

By using a UX research impact framework that combines those program design metrics with key business and user outcomes, you can measure impact through a multi-dimensional lens that covers off the key components of research and its value.

✨ Want to cut to the chase and start measuring impact?

If you’ve read enough and want to get straight to it, download our free template for your research impact framework.

Step-by-step: How to track the impact of your UX research

So, you agree measuring impact is important, and you’re on board with the framework. What are the steps to put it into practice?

Step 1: Define what impact looks like

Since impact can mean different things to different people, it’s important you take the time to define what ‘impact’ means to your organization. I recommend facilitating a group discussion to identify what this means to you and your team. Then you can brainstorm some potential ways to measure this over time.

Step 2: Set intervals for review

When you measure impact may be influenced by what you measure. Personally, I try to create a habit and review impact metrics on a recurring cadence. This includes during:

- Project planning and completion

- Weekly meetings or updates

- Quarterly and annual cycles

I recommend setting a cadence of about one quarter (three months) as an initial pilot. After the three months, you can check in and identify what's working, what isn't, and how you might improve measurement moving forward.

Step 3: Choose your location

Impact tracking can definitely be overly manual, depending on how robust your team is. I personally track everything in Google Sheets. I’ve met with other researchers who have used tools like Airtable, Notion, or JIRA. I definitely recommend picking a tool that your team already uses rather than trying to reinvent the wheel.

If your research team already has a UX research repository, consider housing key findings or putting your tracker within this, to centralize findings and streamline workflows.

💡 Choosing what to document and how to lay it out can be the most daunting part of tracking UX research impact, but you don’t have to create this from scratch. Download our free UX research impact tracker to help you out.

Step 4: Collect feedback and note your impact

Once you’ve picked your tool, you’ll want to add each research study to the catalog and document things like:

- Outcome: The outcome your work had—this is typically the decision that was made and any resulting data you have available.

- Impact size: You can use whatever you want for this—colors, percentages, fractions all work. I personally use t-shirt sizes to convey this (small, medium, large, XL etc.)

- Source files: These are links to the UX research plan and any artifacts/insight summaries. Consider including key UX research reports, snippets from user interviews, memorable quotes from diary research etc. to make an impact.

- Approach: This is a high level mention of the UX research methods you may have used, key research questions you had, and what the study looked like.

- Team lead: Self explanatory—share who the team lead was!

- Product area: This might be the name of the pods/squads you're working with or a specific product area you’re researching.

- Any relevant notes for yourself or the team
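If your tracker lives in a spreadsheet, the fields above map neatly onto one row per study. Here’s a rough Python sketch of that idea. The `ImpactEntry` class, the extra `study` field, the `to_csv` helper, and the sample values are all hypothetical and only there for illustration; adapt the names to whatever your team already uses.

```python
import csv
import io
from dataclasses import asdict, dataclass, field, fields

# T-shirt sizes for impact, as described above (swap in your own scale)
IMPACT_SIZES = ("S", "M", "L", "XL")


@dataclass
class ImpactEntry:
    study: str          # hypothetical: a short name for the study
    outcome: str        # the decision made and any resulting data
    impact_size: str    # one of IMPACT_SIZES
    approach: str       # methods and key research questions
    team_lead: str
    product_area: str
    source_files: list = field(default_factory=list)  # links to plans/artifacts
    notes: str = ""

    def __post_init__(self):
        if self.impact_size not in IMPACT_SIZES:
            raise ValueError(f"impact_size must be one of {IMPACT_SIZES}")


def to_csv(entries):
    """Return the entries as CSV text, ready to import into a spreadsheet."""
    buffer = io.StringIO()
    columns = [f.name for f in fields(ImpactEntry)]
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    for entry in entries:
        row = asdict(entry)
        # Flatten the list of links into a single cell
        row["source_files"] = "; ".join(entry.source_files)
        writer.writerow(row)
    return buffer.getvalue()


# Invented example entry, for illustration only
entry = ImpactEntry(
    study="Onboarding interviews",
    outcome="Team decided to simplify the signup flow",
    impact_size="L",
    approach="Moderated user interviews",
    team_lead="Sample Lead",
    product_area="Onboarding",
    source_files=["research-plan-link", "insight-summary-link"],
)
print(to_csv([entry]))
```

Validating the impact size up front keeps the scale consistent across the team, which makes it easier to filter and summarize entries later.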

Step 5: Share the wins!

By now you should have a record of all of the amazing work you and your team have done. Next, you’ll want to share it more widely. This may look like surfacing weekly updates via Slack or other async channels, or giving quarterly presentations on the state of the team to your manager or executive team. The medium doesn’t matter as much as providing visibility into the outcomes you're driving.

On The Optimal Path Podcast, Roy Opata Olende, Head of UX Research at Zapier, shared how he highlights impact with stakeholders: “Something I’ve started is a monthly report that isn’t focused on ‘here’s the work we’ve done’, but rather ‘what decisions research has impacted in the last month’. I speak to my team and stakeholders, ask them how they used the research, then write it up on our internal blog.”

I’m going to tell you exactly how this research impacted decisions, so we can continually remind people of the fact that if we didn’t have this research, we likely would have made different decisions.

Roy Opata Olende, Head of UX Research at Zapier

Ultimately, it’s about show-don’t-tell—UX research impact is about making the invisible, visible. It’s not enough to let our craft speak for itself—you have to bring stakeholders and executives along for the ride.

Free UX research impact framework templates

To round off, we’re sharing some of the top UX research impact frameworks I’ve come across. The great thing about existing frameworks is that you can take them as they are, or customize and rework them to fit your team’s needs. You might find a framework that will grow with your research function, or you might try a few out before creating your own. It’s all part of the journey—but starting with a template makes it a lot quicker!

UX research impact framework by Maze

Created based on my experience in research, the Maze research impact framework takes a holistic approach to defining and tracking the impact of UX research. It focuses on two key areas: research program design and outcomes, allowing you to quickly record research events and measure their respective impact.

This template has a clean, accessible design with a built-in impact metric appendix and impact tracker, so you can easily define, track and measure research activities—and share research wins with the wider business.

The 3 levels of UX research impact by Tao Dong

UX researcher at Google, Tao Dong, shared this research impact framework and it’s a clear, straightforward option. The framework is easily digestible and focuses more on the organization’s capacity to act on the research, rather than an individual researcher’s expertise or skills.

Multi-level research impact framework by Karin den Bouwmeester

This is a personal favorite—Karin den Bouwmeester’s framework for measuring UX research impact focuses on three core areas: customer impact, organizational impact, and user research outcomes. It’s a great choice if you’re looking to get a well-rounded review of everything research touches.

8 Ways to identify research impact by Victoria Sosik

Finally, we’ve got the research impact framework from Victoria Sosik, Director of UX Research at Verizon. This framework is a popular one in the industry, but can take some time to fully utilize. The framework highlights that defining impact requires nuance—you need to consider the activity that drove the impact, the impact itself, and the scale of the impact. These divide impact into three buckets: product, cultural, and internal.

Putting it all together: Bringing research to product design

When considering research impact, many practitioners think about the research program design or product strategy, but there are so many intangible areas that highlight the impact you’re having on your organization and customers.

Tracking UX research impact offers value across the board, from identifying product opportunities, to keeping a record of your team wins, and showing visibility of UXR impact across the wider organization.

Thinking about sitting down to review and measure impact after working on a long research project may feel overwhelming, but it’s a crucial factor in building out a UX research function and showcasing the amazing work a research team does. With a solid research impact framework in place, it doesn’t have to be difficult or time-consuming to measure research success.

Frequently asked questions about UX research impact

How to measure UX research impact?

You can measure research impact by:

- Defining what impact means

- Setting intervals to review

- Choosing where to track/measure

- Keeping a record of your impact

- Sharing your wins

Why is UXR important?

Research can be a powerful tool for you and your organization. It can help you make informed decisions based on data, reduce bias in the design process, test and validate your ideas, and work on solutions that bring real value to customers.

How do you show impact in UX research?

While the primary purpose of showing UX research impact is to prove the value of research, how you do it will depend on your organization and team. Some ways to show impact in UX research are quarterly updates, project retrospectives, keeping a personal wins journal from projects, and surfacing project wins to the wider team and business.

How does UX research affect businesses?

In Maze’s 2023 Research Maturity Report, we learned that organizations that leverage research at its highest potential gain 2.3x better business outcomes, including reduced time-to-market and an increase in revenue.

What is the outcome of research?

It depends on your impact measures, but generally research outcomes can fall into three core buckets:

- Voice of the customer

- Product experience strategy

- Business outcomes

What are the key metrics for evaluating the impact of UX research on product development?

The metrics you use to evaluate impact can vary based on how you define impact for your team. Check out our research impact tracker for some examples to help you and your team get started.